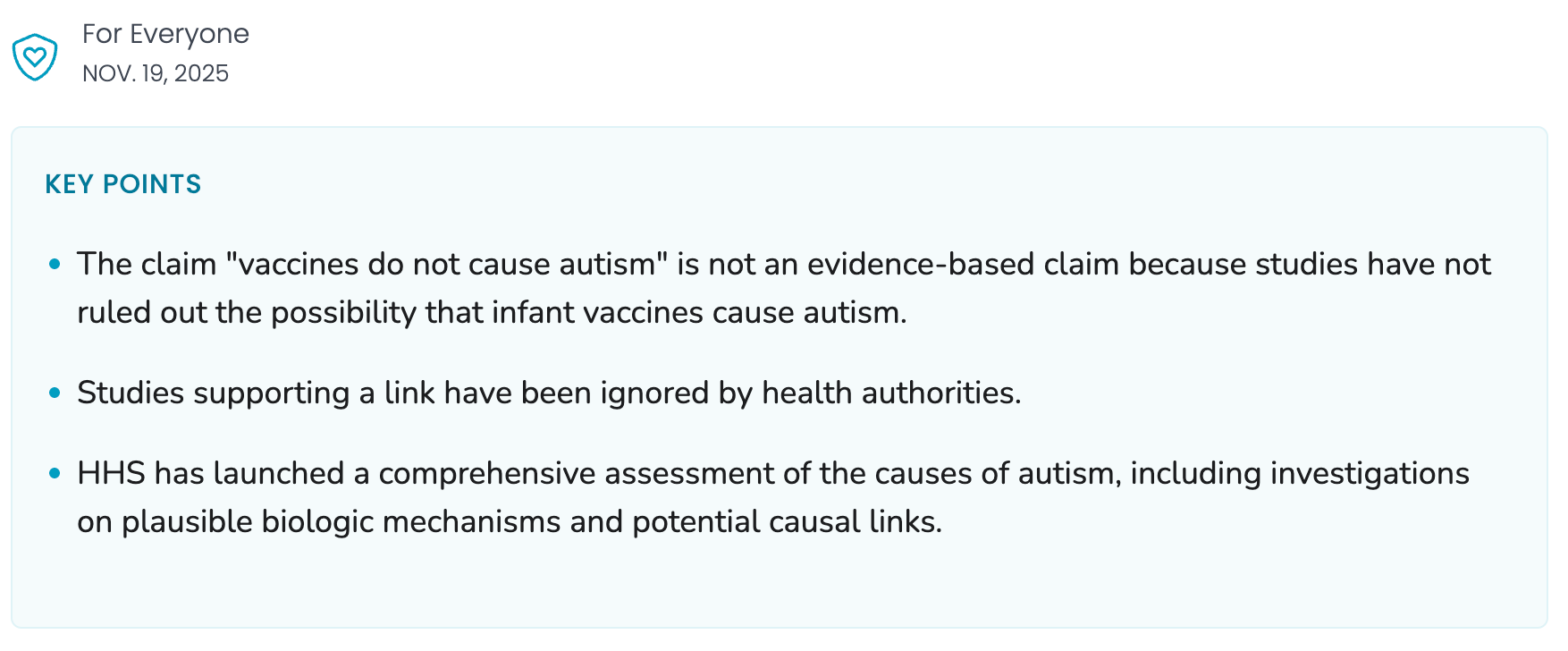

I've spent the past year wondering when the CDC's website is going to stop being a source that I trust. We made it almost the whole year! The CDC's webpage on vaccines and autism was fine, citing studies that debunked the idea that the two are linked. But in November, the page was updated with this big banner on top:

What follows is a long, poorly done justification for the need to pursue a link between vaccines and autism. You can read more about it at the New York Times here.

I hate it. I hate it for the straightforward reason that the CDC is meant to convey trustworthy information about things that will affect people's lives, and this is not trustworthy information about things that will affect people's lives. I hate it for an incoherent mess of thoughts about our need to grapple with the uncertainties of science against a cultural backdrop that understandably seeks comfort in evidence. I hate it with a specific anger for powerful people who cynically co-opt the limitations of science for their own fun and profit.

I also hate it for the very petty reason that it makes my job harder.

I should say that I have a very fun job. I spend hours and hours each week looking up cool science facts on the internet. Right now, my search history includes things like "can you punch a tardigrade," "how big is an emu's wing," and "what was the first clinical trial in history." It's fun!

When it comes to any work I'm doing that relates to medical conditions, the CDC has been a great resource. The language on their website is clear and well-organized, the facts and statistics are helpful, and the illustrations clarify the information and make it even more accessible. These are materials that have been thoughtfully put together to help people understand complex medical issues.

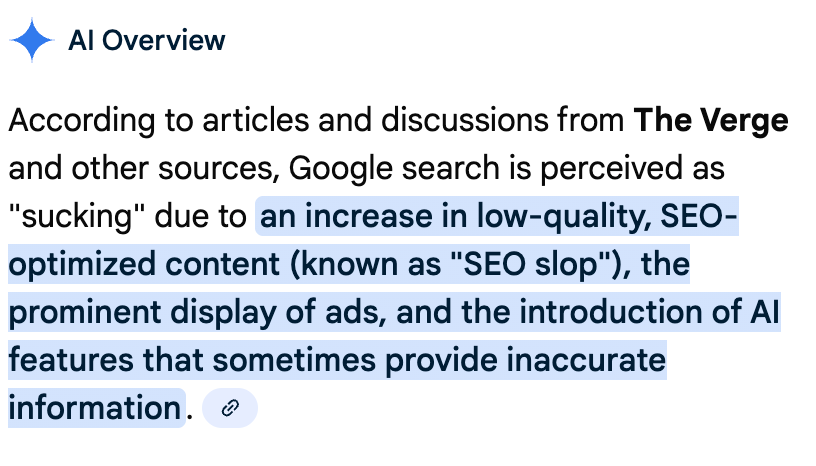

The (former) reliability of sources like the CDC has been especially important in the past few years because as you have probably noticed, search kind of sucks now. I'll let Google AI helpfully explain it for me (I was googling "verge why does google search suck" to see if they have a helpful article on the subject).

I'm sure there are a lot of very good reasons to explain this, but honestly, that's not what I'm here to do. I'm here to be angry and whine and complain and offer no helpful solutions, and then to have an existential crisis. We're about halfway through the itinerary.

The point is that Google search has become terrible in ways that I can't fully articulate, but that I can feel. I used to be able to easily and reliably find solid sources for even the most random science questions I had to research. Now, I mostly get Quora posts that are a total mishmash of words, plus some Reddit threads of varying degrees of relevance and reliability. And when I search for scientists to interview for an episode of Tiny Matters, Google makes it seem like there's just no one out there. For some projects, desperation has made me turn to ChatGPT. Sometimes it helps. Sometimes it lists scientists I would love to talk to, except that they don't exist.

And moreover, I resent that I have to turn to ChatGPT. What I miss about the version of Google that exists in my memory is that it gave me good sources that I could sift through and make decisions about. Using AI as a search engine puts a barrier between me and the information, and that barrier makes it hard for me to sift. Yes, I can work with it to get closer and closer to what I'm looking for, but there is still a feeling that this is out of my hands, and I hate that. And that's not even getting into the many, many, many aggravating implications of AI for the environment and intellectual property.

But also, AI is part of the thing that's making the internet worse, and this is where we get into the existential crisis of it all. This Kurzgesagt video is a great overview of how pervasive the bad information perpetuated by AI is becoming.

And on top of that video, I've had this article stuck in my head for a while. It's about an editor realizing that one of the awesome freelance pitches they got was maybe just a little too good to be true... It's fascinating, and I don't want to spoil it all, so you should go read it. In some ways, it's a scientific method story: someone realized something didn't make sense, questioned it, and figured out a whole mystery. But it's also a story about how AI is making it even easier for good sources to become unreliable.

I love my job, and when I read things like this, there's a part of me that optimistically says, "See, this is what makes what I do so important!" I have to be part of whatever group of people on the internet tries to make sure there's good information here. Then there's a nihilistic side that's like, "lolol what a bunch of self-important bullshit." Like most internal conflicts, there's really no reconciling the two.

When it comes to making things, there's the combination of financial/creative/professional obligations that drive your work, and then there's everything else that happens around you that shapes the way it's received. You don't get to control how people take it. And while that's the worst part of creating things, it can also be the most rewarding. It's how you get interpretations of your work that can frustrate you, challenge you, and lead you to new ideas.

This lack of control is also, I think, an eternal crisis for science communication. In the time that I've done this job, I've been a part of so many conversations about how we convey uncertainty better, how we get people to care about this crisis, and how we get people to understand the myriad nuances around how science answers and doesn't answer questions. But I've also increasingly found myself checked out of those conversations. There's nothing new in them, and they feel like they're in pursuit of something we want and will never get: control. We want control over how our work is taken, and we want control over its impact on the world, and we will never get that.

And yet, I'm in crisis over that realization because it still matters how we say things. It matters that we try to get people to understand uncertainty and crises and nuance. And I believe that people want that for themselves too; it's just hard to sift through which uncertainties and crises and nuances matter when there's so much all the time. I guess I have to believe that or I don't have a job.

Anyway, I'm trying to end on a positive, or at least a constructive, note, so here it is. I posted on Bluesky about the CDC posting misinformation, and someone asked if I have a trusted database of facts. That's a great question, but it also potentially misses the point. There really isn't a good way to trust a singular source of information. In fact, I think you should never aim to put all your trust in one source. People, even well-intentioned ones, are ultimately still human. They make mistakes. Shocking as it is, I have made mistakes! I have put incorrect information on the internet, and it's a shitty feeling, but it's very easy to do!

So instead, what I find most helpful are guiding questions when I find a compelling fact or story.

- How did I find out about this fact?

- Do I usually go to this website, and are they generally trustworthy?

- Do I know who wrote this, and are they generally trustworthy?

- Was this information fact-checked or peer-reviewed in any way?

- Does this fact satisfy some internal narrative I have about the world, particularly one that feels very emotional?

- Can I find other people writing about this fact? And can I trace the fact back to its original source? (this one is particularly important for historical anecdotes)

There's no one way to decision-tree your way through this. The way you answer these questions will shape how you feel about the fact, which will inform the trust or confidence you can place in it. I'm phrasing it this way because, as annoying as it is (and as much as I think there are sources you should objectively trust more than others), trust and confidence are subjective experiences. They're also dynamic experiences. Sometimes you trust a source one day, and the next day you don't.